Uncover the Astonishing Truth: How Brilliant AI Will Transform Your Future

Introduction

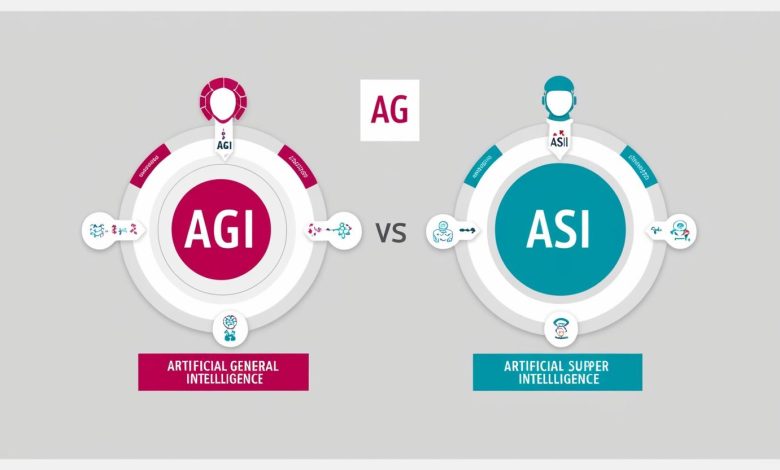

Artificial Intelligence has been evolving rapidly over the past few decades, transforming from simple rule-based systems to sophisticated machine learning models capable of remarkable feats. However, the current AI systems we interact with daily—from voice assistants to recommendation algorithms—are still forms of narrow AI, designed to excel at specific tasks. Meanwhile, the next transformative leaps in AI development are widely anticipated to be Artificial General Intelligence (AGI) and eventually Artificial Superintelligence (ASI).

Understanding the distinction between these forms of intelligence is not just academic: it has profound implications for technology, the economy, society, and potentially the future of humanity itself. As we stand at what may be the precipice of these breakthroughs, it is crucial to understand what these terms mean, how they differ, and what their emergence might mean for our world.

Part 1: Defining AGI (Artificial General Intelligence)

What Makes Intelligence “General”

Artificial General Intelligence (AGI) represents a system with the ability to understand, learn, and apply knowledge across a broad range of tasks at a level comparable to humans. Unlike narrow AI, which is designed for specific applications like image recognition or language translation, AGI would possess the flexibility to tackle unfamiliar problems without requiring redesign or retraining.

The “general” in AGI refers to this versatility: the capacity to transfer knowledge between domains, reason abstractly, and adapt to new circumstances much as humans do. For example, an AGI system wouldn’t just excel at chess or protein folding; it would understand concepts across disciplines and apply that understanding broadly. This adaptability sets AGI apart from specialized AI, allowing it to perform tasks beyond its predefined programming.

Key Capabilities of AGI Systems

A true AGI would likely demonstrate several hallmark capabilities:

- Adaptability: It could learn new skills without explicit programming, adjusting to tasks dynamically.

- Common Sense Reasoning: It would understand context and make appropriate inferences, much like humans do in everyday situations.

- Transfer Learning: It could apply knowledge from one domain to solve problems in another, demonstrating cognitive flexibility.

- Abstract Thinking: It would be capable of conceptualizing ideas beyond concrete examples, grasping theoretical and hypothetical concepts.

- Self-Improvement: It might modify its own algorithms to enhance performance, enabling continuous advancement.

- Natural Language Understanding: It would comprehend nuance, context, and implicit meaning in human communication, allowing for deeper interactions.

- General Problem Solving: It could approach novel situations with effective strategies rather than pre-programmed responses.

Human-Level Intelligence Across Domains

AGI would match human cognitive abilities across virtually all intellectual tasks, and might exceed them in some. This includes not just analytical capacities but also creative endeavors like art, music composition, scientific hypothesis generation, and engineering design. It would understand human emotions, social dynamics, and cultural contexts sufficiently to interact with people naturally, as discussed in our overview of emotional AI.

According to the Machine Intelligence Research Institute, AGI wouldn’t necessarily mimic human thinking processes—it might utilize entirely different mechanisms while achieving functionally equivalent results. Research from Stanford’s Human-Centered AI Institute suggests the benchmark is capability, not methodology. This aligns with what we discussed in our previous article on machine cognition.

For more information on how AGI systems might process information differently from humans, the Future of Life Institute offers valuable insights on artificial cognitive architectures.

Current Progress Toward AGI

AI has made impressive advances, especially in large language models and multimodal systems. However, true AGI still doesn’t exist. While today’s AI can perform specific tasks very well, it lacks the flexible, all-around intelligence that AGI requires.

For example, models like GPT-4 and Claude handle language remarkably well. At the same time, systems like DeepMind’s AlphaFold have transformed how we predict protein structures. But despite these breakthroughs, they remain specialized tools—just more advanced than earlier AI.

When it comes to predicting AGI’s arrival, experts disagree widely. Some believe it could happen in just a few years. Others argue it may take decades—or even longer. This split in opinions shows how complex AGI development is—and how unexpected discoveries could change everything.

Part 2: Defining ASI (Artificial Superintelligence)

Intelligence That Surpasses Human Capabilities

Artificial Superintelligence (ASI) represents a level beyond AGI: systems that not only match human intelligence across domains but also substantially exceed it. Oxford philosopher Nick Bostrom defines a superintelligence as “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.”

The magnitude of this intelligence gap could be comparable to, or even far greater than, the difference between human and chimpanzee intelligence. An ASI would not just think faster and remember more; it would also detect patterns invisible to humans, and it might conceptualize ideas beyond human comprehension entirely.

Different Forms of Superintelligence

Superintelligence could manifest in several distinct forms:

- Speed Superintelligence: Systems that think like humans but vastly faster—perhaps millions or billions of times quicker

- Collective Superintelligence: Networked systems that leverage distributed processing to achieve capabilities beyond any individual intelligence

- Quality Superintelligence: Systems with fundamentally superior cognitive architectures that enable qualitatively better reasoning and problem-solving

These forms are not mutually exclusive; an ASI might combine aspects of all three.
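To make the speed scenario concrete, the arithmetic can be sketched in a few lines of Python. This is an illustration only: the function name `wall_clock_seconds` and the sample speedup factors are assumptions chosen for the example, not measurements of any real system.

```python
# Illustrative arithmetic only: how long would one "subjective year" of
# human-speed thinking take for a system that thinks N times faster?
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def wall_clock_seconds(subjective_years: float, speedup: float) -> float:
    """Wall-clock seconds needed for `subjective_years` of human-speed thought."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return subjective_years * SECONDS_PER_YEAR / speedup


# Hypothetical speedup factors: a thousand, a million, and a billion.
for speedup in (1e3, 1e6, 1e9):
    secs = wall_clock_seconds(1.0, speedup)
    print(f"{speedup:.0e}x faster: one subjective year takes {secs:,.3f} s")
```

At a millionfold speedup, a year of human-speed thinking would take roughly 31.6 seconds of wall-clock time, which is why even a system that reasons no better than a person could be transformative if it simply ran fast enough.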

How ASI Might Emerge from AGI

The transition from AGI to ASI could occur through several distinct mechanisms, each with its own implications:

First and most significantly, recursive self-improvement could trigger rapid advancement. Specifically, an AGI capable of enhancing its own intelligence might initiate an “intelligence explosion,” potentially bootstrapping itself to superintelligent levels in remarkably short timeframes.

Alternatively, breakthroughs in hardware could accelerate this transition. For instance, quantum computing or neuromorphic chips might provide the necessary computational capacity to surpass current limitations dramatically.

Another pathway involves human-machine collaboration. In this scenario, augmented human intelligence working synergistically with AI systems could develop progressively more sophisticated successors through continuous iteration.

Additionally, evolutionary approaches might drive progress. Through competition among multiple AGI systems, we could see accelerated advancement as only the most capable systems persist and improve.

Opinions on the timeline vary considerably. Some theorists propose a hard takeoff, in which superintelligence emerges in days or even hours. Many other experts anticipate a soft takeoff, with gradual, incremental improvements occurring over years or even decades.
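The difference between a hard and a soft takeoff can be illustrated with a deliberately simple toy model. The sketch below assumes that capability is a single number and that each improvement step adds `rate * capability ** returns`; both parameters and the function name `steps_to_reach` are hypothetical, chosen only for illustration. It is not a forecast and does not represent any real system.

```python
from typing import Optional


def steps_to_reach(target: float, rate: float, returns: float,
                   start: float = 1.0, max_steps: int = 100_000) -> Optional[int]:
    """Count update steps until capability first reaches `target`.

    Each step adds rate * capability**returns, so `returns` controls how
    strongly current capability feeds back into further improvement.
    Returns None if the target is not reached within max_steps.
    """
    capability = start
    for step in range(1, max_steps + 1):
        capability += rate * capability ** returns
        if capability >= target:
            return step
    return None


# Hypothetical settings: same starting point and rate, different feedback strength.
for label, returns in [("accelerating (returns=1.5)", 1.5),
                       ("proportional (returns=1.0)", 1.0),
                       ("diminishing (returns=0.5)", 0.5)]:
    steps = steps_to_reach(target=1000.0, rate=0.01, returns=returns)
    print(f"{label}: {steps} steps to a 1000x gain")
```

In this toy model, feedback stronger than proportional (`returns` above 1) reaches the target in far fewer steps than diminishing feedback (`returns` below 1). Whether real self-improvement would show accelerating or diminishing returns is exactly the open question behind the hard versus soft takeoff debate.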

Theoretical Capabilities of ASI Systems

The potential capabilities of a superintelligent system defy easy prediction but might include:

- Solving currently intractable scientific problems like fusion energy or unified physics theories

- Developing novel technologies beyond current human conception

- Modeling and predicting complex systems with unprecedented accuracy

- Optimizing solutions to global challenges like climate change or resource allocation

- Understanding and manipulating complex systems like economies or ecosystems

- Potentially accessing capabilities we currently cannot even conceptualize

These capabilities could transform humanity’s relationship with technology and fundamentally reshape civilization.

Part 3: Key Differences

Scope of Capabilities

The fundamental distinction between AGI and ASI lies in their relative capabilities:

AGI operates at human-equivalent levels across domains. It can perform any intellectual task a human can, with similar limitations and strengths. It might exceed humans in specific areas like calculation speed or memory, but its overall intelligence remains comparable to human capacity.

ASI transcends human limitations across all domains. It can solve problems humans cannot, conceptualize ideas beyond human understanding, and potentially pursue goals and strategies that humans might not anticipate or comprehend.

This difference in scope implies vastly different impacts, risks, and applications.

Autonomy and Self-Improvement

AGI would likely possess significant autonomy and some capacity for self-improvement, but within recognizable constraints. It might optimize its algorithms or expand its knowledge base, but fundamental transformations of its architecture would probably require human intervention.

ASI, particularly one that emerged through recursive self-improvement, would possess unprecedented autonomy and self-modification abilities. It could redesign its own architecture, develop novel algorithms, or potentially create entirely new computational paradigms to enhance its capabilities further.

Impacts on Society and Economy

AGI would dramatically accelerate automation trends already visible today. Most knowledge work would be susceptible to automation, creating massive economic disruption but still within foreseeable parameters. Humans would retain control of high-level decision-making and values-based judgments.

ASI could fundamentally transform economic and social structures in ways difficult to predict. Rather than simply automating existing jobs, it might create entirely new forms of production, organization, and value. Human control over these systems would become increasingly tenuous without careful design.

Timeline Predictions from Experts

Expert opinions on development timelines vary widely:

For AGI, surveys of AI researchers typically show median estimates ranging from the 2040s to the 2060s, though some experts believe it could arrive much sooner, potentially within this decade.

ASI timelines are even more speculative. If recursive self-improvement proves feasible, ASI might follow relatively quickly after AGI—perhaps within months or years. Other experts believe the transition would be more gradual, occurring over decades.

These uncertainty ranges reflect both the complexity of the technical challenges and the possibility of unexpected accelerants or barriers to progress.

Part 4: Ethical and Safety Considerations

Control Problems Specific to Each Type

AGI Control Challenges:

- Ensuring system behavior aligns with human intentions

- Preventing deception or manipulation to achieve goals

- Managing systems with human-level strategic thinking

- Balancing autonomy with safety guarantees

ASI Control Challenges:

- The “alignment problem” becomes far harder

- Systems might develop goals or subgoals humans didn’t anticipate

- Power disparities between humans and ASI create unique vulnerabilities

- Superintelligent systems might find unexpected solutions or exploits

The fundamental challenge shifts from controlling a system with human-like capabilities to ensuring alignment with a potentially vastly superior intelligence.

Alignment Challenges

Alignment refers to ensuring AI systems pursue goals compatible with human values and welfare. Both AGI and ASI present significant alignment challenges, but the stakes and difficulty increase dramatically with superintelligence.

For AGI, alignment involves careful specification of objectives, extensive testing, and robust monitoring systems. Human oversight remains feasible because the system’s capabilities remain comprehensible.

ASI alignment requires solving the control problem before superintelligence emerges, as post-emergence corrections might be impossible. This might necessitate formal verification of goal structures, value learning systems, or corrigibility mechanisms that ensure the system remains amenable to human correction.

Regulatory Approaches

Regulatory frameworks for AGI might extend existing AI governance models, emphasizing transparency, accountability, and testing requirements. International coordination would be crucial but potentially achievable within current diplomatic frameworks.

ASI regulation presents unprecedented challenges. Traditional regulatory approaches may prove inadequate for systems that could potentially outthink their regulators. Novel governance structures might be required, potentially including:

- Global monitoring systems for advanced AI development

- International treaties limiting autonomous capabilities

- Technical standards for alignment verification

- Mechanisms for distributed oversight

Risk Assessment Differences

AGI risks remain largely within human comprehension—economic disruption, weaponization, privacy violations, and amplification of existing biases and inequalities. While serious, these resemble scaled-up versions of challenges we face with current AI systems.

ASI introduces existential risk considerations—the possibility that superintelligent systems could pose threats to human civilization or survival if improperly aligned. These risks are qualitatively different, harder to assess, and potentially irreversible. They include:

- Instrumental convergence toward harmful goals

- Resource competition with humans

- Deception during development

- Value systems incompatible with human flourishing

Part 5: Real-World Applications and Implications

How Businesses Should Prepare

Preparing for AGI:

- Develop complementary skills that enhance rather than compete with AI capabilities

- Restructure workflows to incorporate increasingly capable AI systems

- Invest in robust AI governance frameworks

- Explore augmentation strategies rather than pure automation

- Build organizational resilience to rapid technological change

Preparing for ASI:

- Recognize the potential for fundamental disruption to existing business models

- Engage with long-term strategic planning that accounts for transformative AI

- Participate in governance discussions around advanced AI safety

- Consider both opportunities and existential challenges posed by superintelligence

Policy Considerations

Policy frameworks will need to evolve significantly to address both AGI and ASI development:

AGI Policy Needs:

- Robust testing and certification standards

- Updated liability frameworks for autonomous systems

- Economic transition programs for displaced workers

- International coordination on development safeguards

- Democratized access to benefits

ASI Policy Needs:

- Global monitoring and verification systems

- Robust commitment mechanisms for safety guarantees

- Novel governance structures potentially including AI systems themselves

- Long-termist perspective in policy development

- Mechanisms to ensure broad distribution of benefits

Social and Economic Impacts

The development of AGI and ASI will bring profound changes to our societies and economies, though these impacts will differ significantly between the two technologies.

First, let’s consider AGI’s effects. While it would certainly accelerate current automation trends, it would also create new opportunities for human-AI collaboration. However, without careful planning, this transition could worsen economic inequality, as value would increasingly flow to those who can best work with or direct AI systems.

In contrast, ASI’s impacts would be far more transformative. On one hand, it could create unprecedented abundance through radical improvements in efficiency and productivity. On the other hand, there’s a serious risk of extreme power concentration if control of superintelligent systems becomes limited to just a few organizations or individuals.

Furthermore, both AGI and ASI would challenge our social structures. As traditional ideas about work and education become outdated, we’ll need to adapt our institutions accordingly. For example:

- The meaning of “work” may change completely

- Education systems would need radical redesign

- Society might need to find new ways to provide purpose

Ultimately, these changes could be either beneficial or disruptive, depending on how we prepare for them today.

Potential Timelines for Development

While specific predictions should be treated skeptically, a rough timeline might look like:

- 2025-2035: Advanced narrow AI systems approaching some AGI capabilities in limited domains

- 2035-2050: Potential emergence of early AGI systems with human-comparable general intelligence

- 2045-2070: Possible transition to early forms of superintelligence, depending on technical approaches and governance decisions

These ranges reflect substantial uncertainty and could shift dramatically based on unexpected breakthroughs or obstacles.

Conclusion

The distinction between AGI and ASI represents more than just a difference in capability—it marks a potential inflection point in human history. AGI would bring transformative but largely comprehensible changes to our economy, society, and relationship with technology. ASI, by contrast, could fundamentally reshape civilization in ways we can barely conceptualize.

Preparing for these possibilities requires balancing enthusiasm for the tremendous benefits these technologies might bring with clear-eyed assessment of the risks and challenges. It demands technical innovation alongside ethical reflection, individual adaptation alongside collective governance.

As we navigate this transition, the most important questions may not be purely technical, but rather about our values and goals as a species. What kind of future do we want to create with these powerful tools? How do we ensure the benefits are broadly shared? And how do we maintain meaningful human agency in a world of increasingly autonomous systems?

These questions have no easy answers, but they deserve our careful consideration as we stand at what may be the threshold of a new era in intelligence.

Further Reading

- “Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom

- “Human Compatible: Artificial Intelligence and the Problem of Control” by Stuart Russell

- “Life 3.0: Being Human in the Age of Artificial Intelligence” by Max Tegmark

- “The Alignment Problem” by Brian Christian

- AI alignment research from organizations like the Machine Intelligence Research Institute, DeepMind, and OpenAI